feat: 切换后端至PaddleOCR-NCNN,切换工程为CMake

1.项目后端整体迁移至PaddleOCR-NCNN算法,已通过基本的兼容性测试 2.工程改为使用CMake组织,后续为了更好地兼容第三方库,不再提供QMake工程 3.重整权利声明文件,重整代码工程,确保最小化侵权风险 Log: 切换后端至PaddleOCR-NCNN,切换工程为CMake Change-Id: I4d5d2c5d37505a4a24b389b1a4c5d12f17bfa38c

This commit is contained in:

70

3rdparty/ncnn/tools/pnnx/CMakeLists.txt

vendored

Normal file

70

3rdparty/ncnn/tools/pnnx/CMakeLists.txt

vendored

Normal file

@@ -0,0 +1,70 @@

|

||||

|

||||

if(NOT CMAKE_VERSION VERSION_LESS "3.15")

|

||||

# enable CMAKE_MSVC_RUNTIME_LIBRARY

|

||||

cmake_policy(SET CMP0091 NEW)

|

||||

endif()

|

||||

|

||||

project(pnnx)

|

||||

cmake_minimum_required(VERSION 3.12)

|

||||

|

||||

if(POLICY CMP0074)

|

||||

cmake_policy(SET CMP0074 NEW)

|

||||

endif()

|

||||

|

||||

if(MSVC AND NOT CMAKE_VERSION VERSION_LESS "3.15")

|

||||

option(PNNX_BUILD_WITH_STATIC_CRT "Enables use of statically linked CRT for statically linked pnnx" OFF)

|

||||

if(PNNX_BUILD_WITH_STATIC_CRT)

|

||||

# cmake before version 3.15 not work

|

||||

set(CMAKE_MSVC_RUNTIME_LIBRARY "MultiThreaded$<$<CONFIG:Debug>:Debug>")

|

||||

endif()

|

||||

endif()

|

||||

|

||||

list(APPEND CMAKE_MODULE_PATH "${CMAKE_CURRENT_SOURCE_DIR}/cmake")

|

||||

include(PNNXPyTorch)

|

||||

|

||||

# c++14 is required for using torch headers

|

||||

set(CMAKE_CXX_STANDARD 14)

|

||||

|

||||

#set(CMAKE_BUILD_TYPE debug)

|

||||

#set(CMAKE_BUILD_TYPE relwithdebinfo)

|

||||

#set(CMAKE_BUILD_TYPE release)

|

||||

|

||||

option(PNNX_COVERAGE "build for coverage" OFF)

|

||||

|

||||

#set(Torch_INSTALL_DIR "/home/nihui/.local/lib/python3.9/site-packages/torch" CACHE STRING "")

|

||||

#set(Torch_INSTALL_DIR "/home/nihui/osd/pnnx/pytorch-v1.10.0/build/install" CACHE STRING "")

|

||||

#set(Torch_INSTALL_DIR "/home/nihui/osd/pnnx/libtorch" CACHE STRING "")

|

||||

set(TorchVision_INSTALL_DIR "/home/nihui/osd/vision/build/install" CACHE STRING "")

|

||||

|

||||

#set(Torch_DIR "${Torch_INSTALL_DIR}/share/cmake/Torch")

|

||||

set(TorchVision_DIR "${TorchVision_INSTALL_DIR}/share/cmake/TorchVision")

|

||||

|

||||

find_package(Python3 COMPONENTS Interpreter Development)

|

||||

|

||||

PNNXProbeForPyTorchInstall()

|

||||

find_package(Torch REQUIRED)

|

||||

|

||||

find_package(TorchVision QUIET)

|

||||

|

||||

message(STATUS "Torch_VERSION = ${Torch_VERSION}")

|

||||

message(STATUS "Torch_VERSION_MAJOR = ${Torch_VERSION_MAJOR}")

|

||||

message(STATUS "Torch_VERSION_MINOR = ${Torch_VERSION_MINOR}")

|

||||

message(STATUS "Torch_VERSION_PATCH = ${Torch_VERSION_PATCH}")

|

||||

|

||||

if(Torch_VERSION VERSION_LESS "1.8")

|

||||

message(FATAL_ERROR "pnnx only supports PyTorch >= 1.8")

|

||||

endif()

|

||||

|

||||

if(TorchVision_FOUND)

|

||||

message(STATUS "Building with TorchVision")

|

||||

add_definitions(-DPNNX_TORCHVISION)

|

||||

else()

|

||||

message(WARNING "Building without TorchVision")

|

||||

endif()

|

||||

|

||||

include_directories(${TORCH_INCLUDE_DIRS})

|

||||

|

||||

add_subdirectory(src)

|

||||

|

||||

enable_testing()

|

||||

add_subdirectory(tests)

|

||||

658

3rdparty/ncnn/tools/pnnx/README.md

vendored

Normal file

658

3rdparty/ncnn/tools/pnnx/README.md

vendored

Normal file

@@ -0,0 +1,658 @@

|

||||

# PNNX

|

||||

PyTorch Neural Network eXchange(PNNX) is an open standard for PyTorch model interoperability. PNNX provides an open model format for PyTorch. It defines computation graph as well as high level operators strictly matches PyTorch.

|

||||

|

||||

# Rationale

|

||||

PyTorch is currently one of the most popular machine learning frameworks. We need to deploy the trained AI model to various hardware and environments more conveniently and easily.

|

||||

|

||||

Before PNNX, we had the following methods:

|

||||

|

||||

1. export to ONNX, and deploy with ONNX-runtime

|

||||

2. export to ONNX, and convert onnx to inference-framework specific format, and deploy with TensorRT/OpenVINO/ncnn/etc.

|

||||

3. export to TorchScript, and deploy with libtorch

|

||||

|

||||

As far as we know, ONNX has the ability to express the PyTorch model and it is an open standard. People usually use ONNX as an intermediate representation between PyTorch and the inference platform. However, ONNX still has the following fatal problems, which makes the birth of PNNX necessary:

|

||||

|

||||

1. ONNX does not have a human-readable and editable file representation, making it difficult for users to easily modify the computation graph or add custom operators.

|

||||

2. The operator definition of ONNX is not completely in accordance with PyTorch. When exporting some PyTorch operators, glue operators are often added passively by ONNX, which makes the computation graph inconsistent with PyTorch and may impact the inference efficiency.

|

||||

3. There are a large number of additional parameters designed to be compatible with various ML frameworks in the operator definition in ONNX. These parameters increase the burden of inference implementation on hardware and software.

|

||||

|

||||

PNNX tries to define a set of operators and a simple and easy-to-use format that are completely contrasted with the python api of PyTorch, so that the conversion and interoperability of PyTorch models are more convenient.

|

||||

|

||||

# Features

|

||||

|

||||

1. [Human readable and editable format](#the-pnnxparam-format)

|

||||

2. [Plain model binary in storage zip](#the-pnnxbin-format)

|

||||

3. [One-to-one mapping of PNNX operators and PyTorch python api](#pnnx-operator)

|

||||

4. [Preserve math expression as one operator](#pnnx-expression-operator)

|

||||

5. [Preserve torch function as one operator](#pnnx-torch-function-operator)

|

||||

6. [Preserve miscellaneous module as one operator](#pnnx-module-operator)

|

||||

7. [Inference via exported PyTorch python code](#pnnx-python-inference)

|

||||

8. [Tensor shape propagation](#pnnx-shape-propagation)

|

||||

9. [Model optimization](#pnnx-model-optimization)

|

||||

10. [Custom operator support](#pnnx-custom-operator)

|

||||

|

||||

# Build TorchScript to PNNX converter

|

||||

|

||||

1. Install PyTorch and TorchVision c++ library

|

||||

2. Build PNNX with cmake

|

||||

|

||||

# Usage

|

||||

|

||||

1. Export your model to TorchScript

|

||||

|

||||

```python

|

||||

import torch

|

||||

import torchvision.models as models

|

||||

|

||||

net = models.resnet18(pretrained=True)

|

||||

net = net.eval()

|

||||

|

||||

x = torch.rand(1, 3, 224, 224)

|

||||

|

||||

mod = torch.jit.trace(net, x)

|

||||

torch.jit.save(mod, "resnet18.pt")

|

||||

```

|

||||

|

||||

2. Convert TorchScript to PNNX

|

||||

|

||||

```shell

|

||||

pnnx resnet18.pt inputshape=[1,3,224,224]

|

||||

```

|

||||

|

||||

Normally, you will get six files

|

||||

|

||||

```resnet18.pnnx.param``` PNNX graph definition

|

||||

|

||||

```resnet18.pnnx.bin``` PNNX model weight

|

||||

|

||||

```resnet18_pnnx.py``` PyTorch script for inference, the python code for model construction and weight initialization

|

||||

|

||||

```resnet18.ncnn.param``` ncnn graph definition

|

||||

|

||||

```resnet18.ncnn.bin``` ncnn model weight

|

||||

|

||||

```resnet18_ncnn.py``` pyncnn script for inference

|

||||

|

||||

3. Visualize PNNX with Netron

|

||||

|

||||

Open https://netron.app/ in browser, and drag resnet18.pnnx.param into it.

|

||||

|

||||

4. PNNX command line options

|

||||

|

||||

```

|

||||

Usage: pnnx [model.pt] [(key=value)...]

|

||||

pnnxparam=model.pnnx.param

|

||||

pnnxbin=model.pnnx.bin

|

||||

pnnxpy=model_pnnx.py

|

||||

ncnnparam=model.ncnn.param

|

||||

ncnnbin=model.ncnn.bin

|

||||

ncnnpy=model_ncnn.py

|

||||

optlevel=2

|

||||

device=cpu/gpu

|

||||

inputshape=[1,3,224,224],...

|

||||

inputshape2=[1,3,320,320],...

|

||||

customop=/home/nihui/.cache/torch_extensions/fused/fused.so,...

|

||||

moduleop=models.common.Focus,models.yolo.Detect,...

|

||||

Sample usage: pnnx mobilenet_v2.pt inputshape=[1,3,224,224]

|

||||

pnnx yolov5s.pt inputshape=[1,3,640,640] inputshape2=[1,3,320,320] device=gpu moduleop=models.common.Focus,models.yolo.Detect

|

||||

```

|

||||

|

||||

Parameters:

|

||||

|

||||

`pnnxparam` (default="*.pnnx.param", * is the model name): PNNX graph definition file

|

||||

|

||||

`pnnxbin` (default="*.pnnx.bin"): PNNX model weight

|

||||

|

||||

`pnnxpy` (default="*_pnnx.py"): PyTorch script for inference, including model construction and weight initialization code

|

||||

|

||||

`ncnnparam` (default="*.ncnn.param"): ncnn graph definition

|

||||

|

||||

`ncnnbin` (default="*.ncnn.bin"): ncnn model weight

|

||||

|

||||

`ncnnpy` (default="*_ncnn.py"): pyncnn script for inference

|

||||

|

||||

`optlevel` (default=2): graph optimization level

|

||||

|

||||

| Option | Optimization level |

|

||||

|--------|---------------------------------|

|

||||

| 0 | do not apply optimization |

|

||||

| 1 | optimization for inference |

|

||||

| 2 | optimization more for inference |

|

||||

|

||||

`device` (default="cpu"): device type for the input in TorchScript model, cpu or gpu

|

||||

|

||||

`inputshape` (Optional): shapes of model inputs. It is used to resolve tensor shapes in model graph. for example, `[1,3,224,224]` for the model with only 1 input, `[1,3,224,224],[1,3,224,224]` for the model that have 2 inputs.

|

||||

|

||||

`inputshape2` (Optional): shapes of alternative model inputs, the format is identical to `inputshape`. Usually, it is used with `inputshape` to resolve dynamic shape (-1) in model graph.

|

||||

|

||||

`customop` (Optional): list of Torch extensions (dynamic library) for custom operators, separated by ",". For example, `/home/nihui/.cache/torch_extensions/fused/fused.so,...`

|

||||

|

||||

`moduleop` (Optional): list of modules to keep as one big operator, separated by ",". for example, `models.common.Focus,models.yolo.Detect`

|

||||

|

||||

# The pnnx.param format

|

||||

|

||||

### example

|

||||

```

|

||||

7767517

|

||||

4 3

|

||||

pnnx.Input input 0 1 0

|

||||

nn.Conv2d conv_0 1 1 0 1 bias=1 dilation=(1,1) groups=1 in_channels=12 kernel_size=(3,3) out_channels=16 padding=(0,0) stride=(1,1) @bias=(16)f32 @weight=(16,12,3,3)f32

|

||||

nn.Conv2d conv_1 1 1 1 2 bias=1 dilation=(1,1) groups=1 in_channels=16 kernel_size=(2,2) out_channels=20 padding=(2,2) stride=(2,2) @bias=(20)f32 @weight=(20,16,2,2)f32

|

||||

pnnx.Output output 1 0 2

|

||||

```

|

||||

### overview

|

||||

```

|

||||

[magic]

|

||||

```

|

||||

* magic number : 7767517

|

||||

```

|

||||

[operator count] [operand count]

|

||||

```

|

||||

* operator count : count of the operator line follows

|

||||

* operand count : count of all operands

|

||||

### operator line

|

||||

```

|

||||

[type] [name] [input count] [output count] [input operands] [output operands] [operator params]

|

||||

```

|

||||

* type : type name, such as Conv2d ReLU etc

|

||||

* name : name of this operator

|

||||

* input count : count of the operands this operator needs as input

|

||||

* output count : count of the operands this operator produces as output

|

||||

* input operands : name list of all the input blob names, separated by space

|

||||

* output operands : name list of all the output blob names, separated by space

|

||||

* operator params : key=value pair list, separated by space, operator weights are prefixed by ```@``` symbol, tensor shapes are prefixed by ```#``` symbol, input parameter keys are prefixed by ```$```

|

||||

|

||||

# The pnnx.bin format

|

||||

|

||||

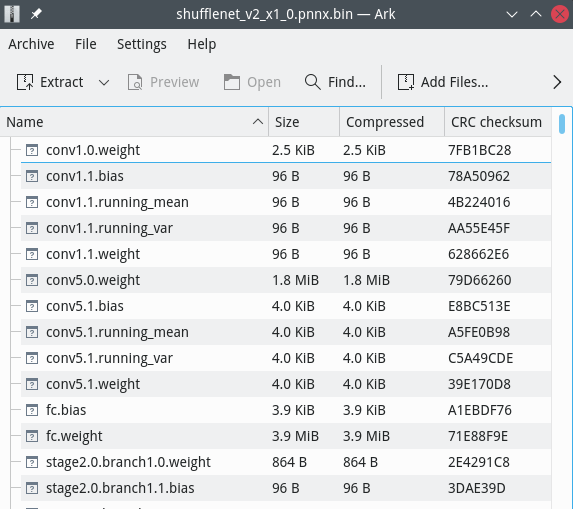

pnnx.bin file is a zip file with store-only mode(no compression)

|

||||

|

||||

weight binary file has its name composed by operator name and weight name

|

||||

|

||||

For example, ```nn.Conv2d conv_0 1 1 0 1 bias=1 dilation=(1,1) groups=1 in_channels=12 kernel_size=(3,3) out_channels=16 padding=(0,0) stride=(1,1) @bias=(16) @weight=(16,12,3,3)``` would pull conv_0.weight and conv_0.bias into pnnx.bin zip archive.

|

||||

|

||||

weight binaries can be listed or modified with any archive application eg. 7zip

|

||||

|

||||

|

||||

|

||||

# PNNX operator

|

||||

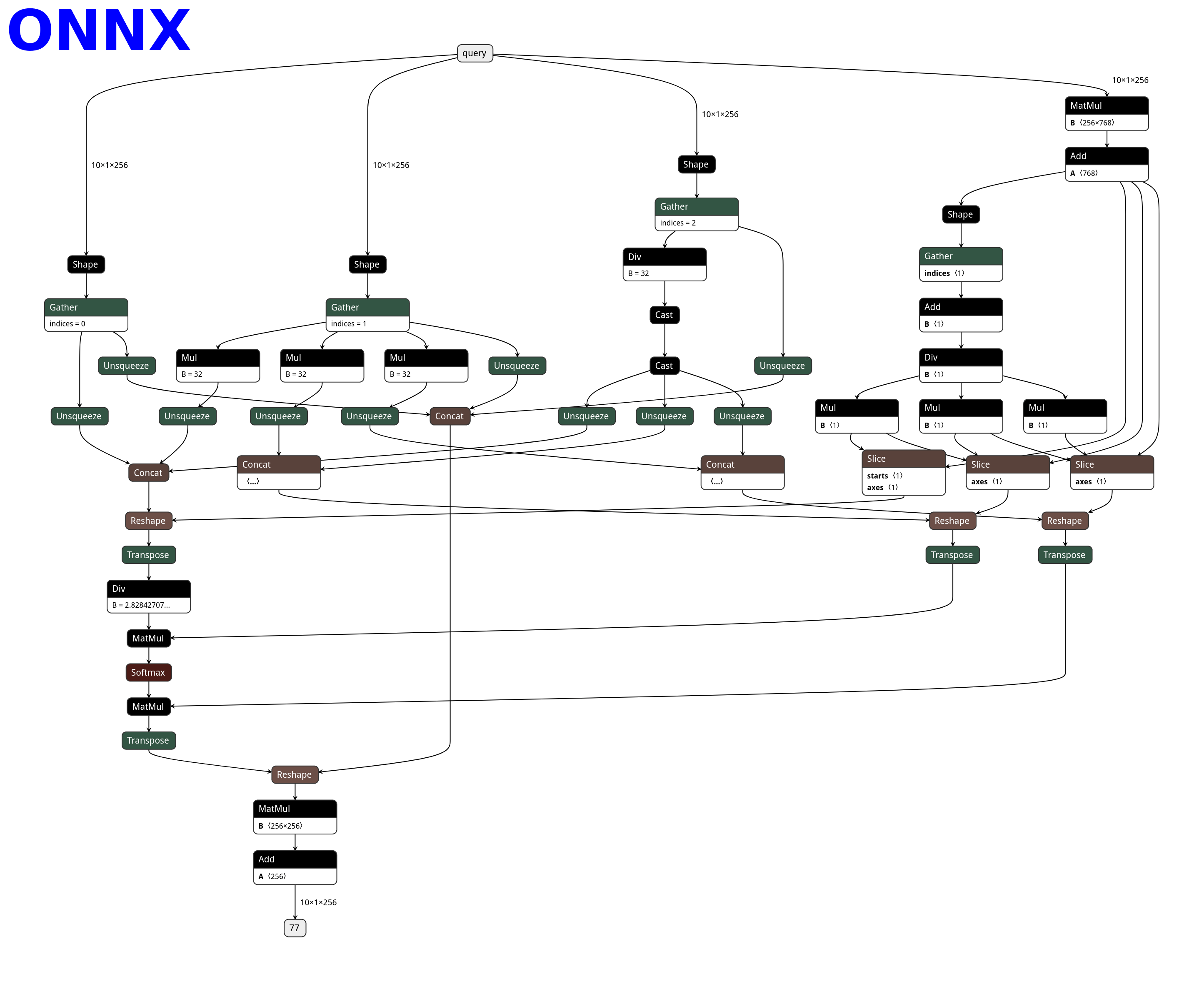

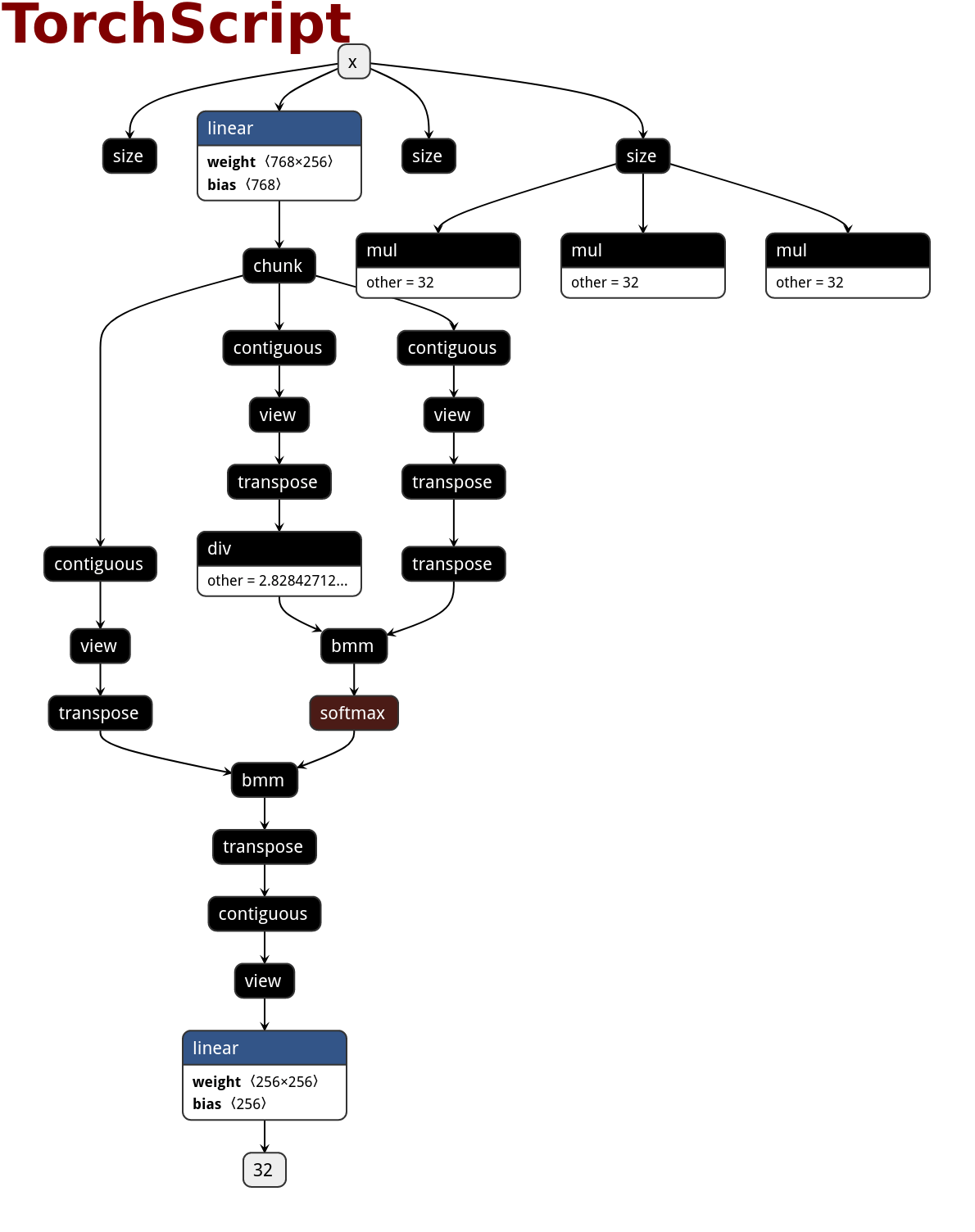

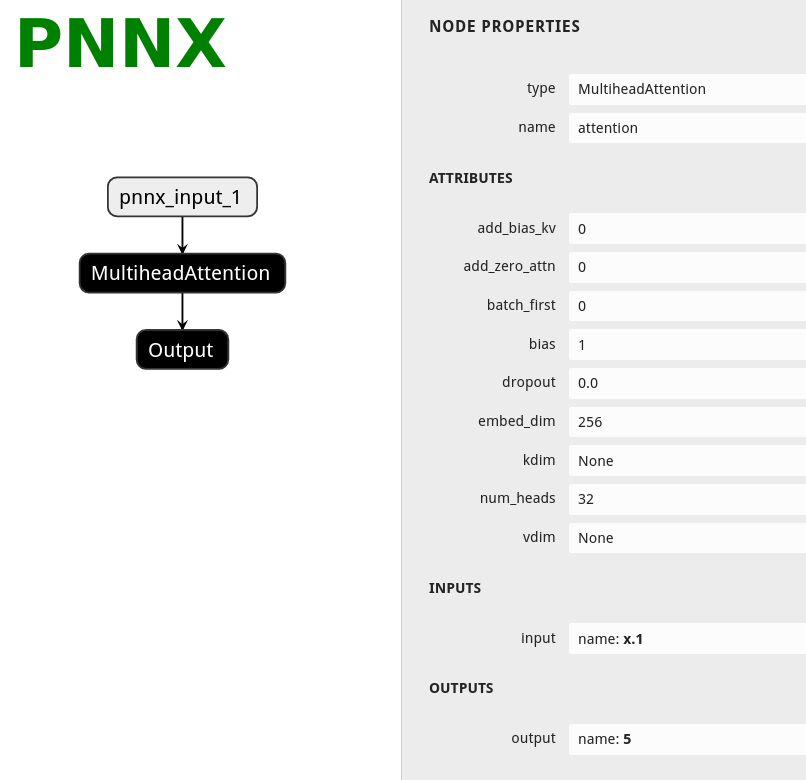

PNNX always preserve operators from what PyTorch python api provides.

|

||||

|

||||

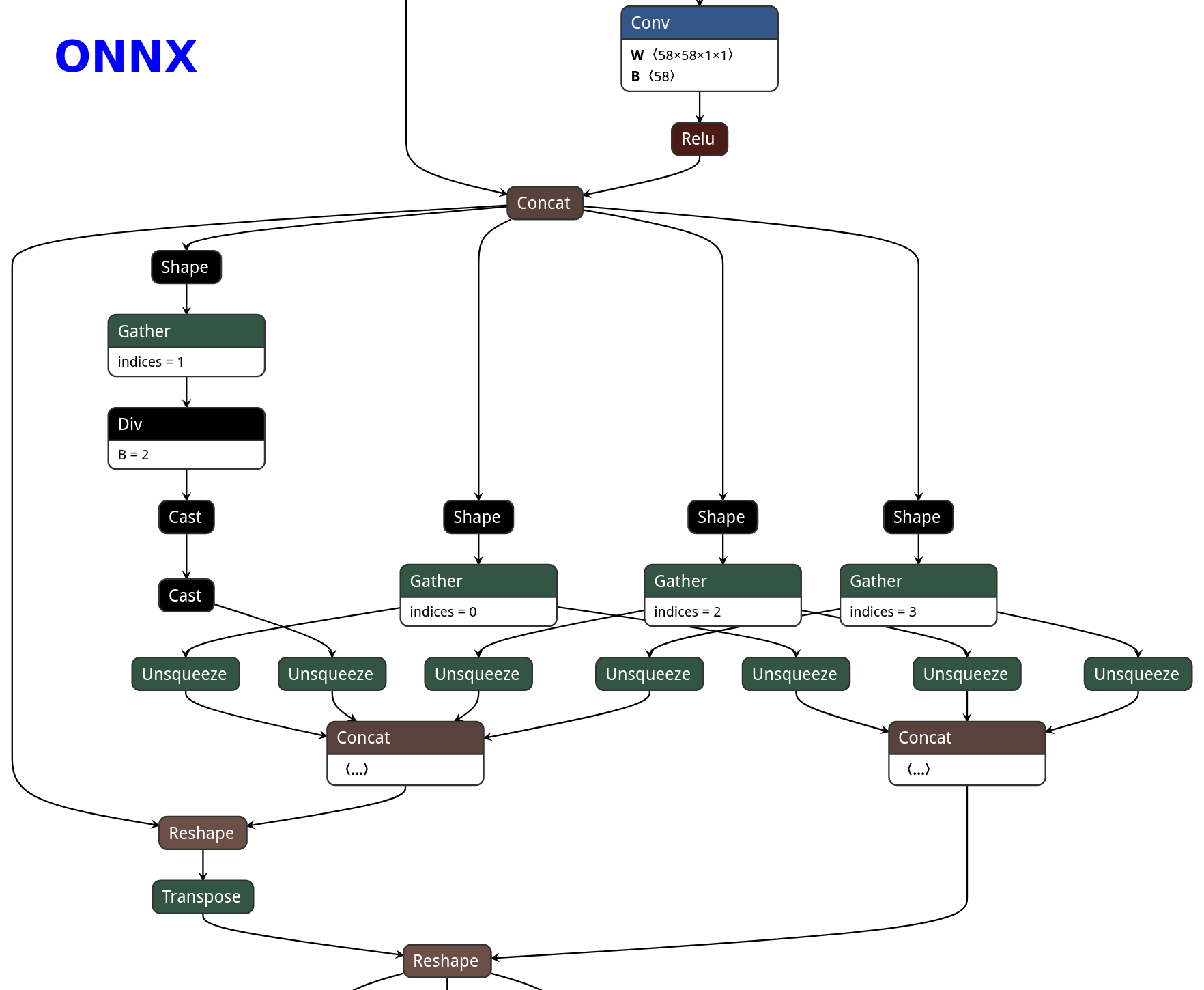

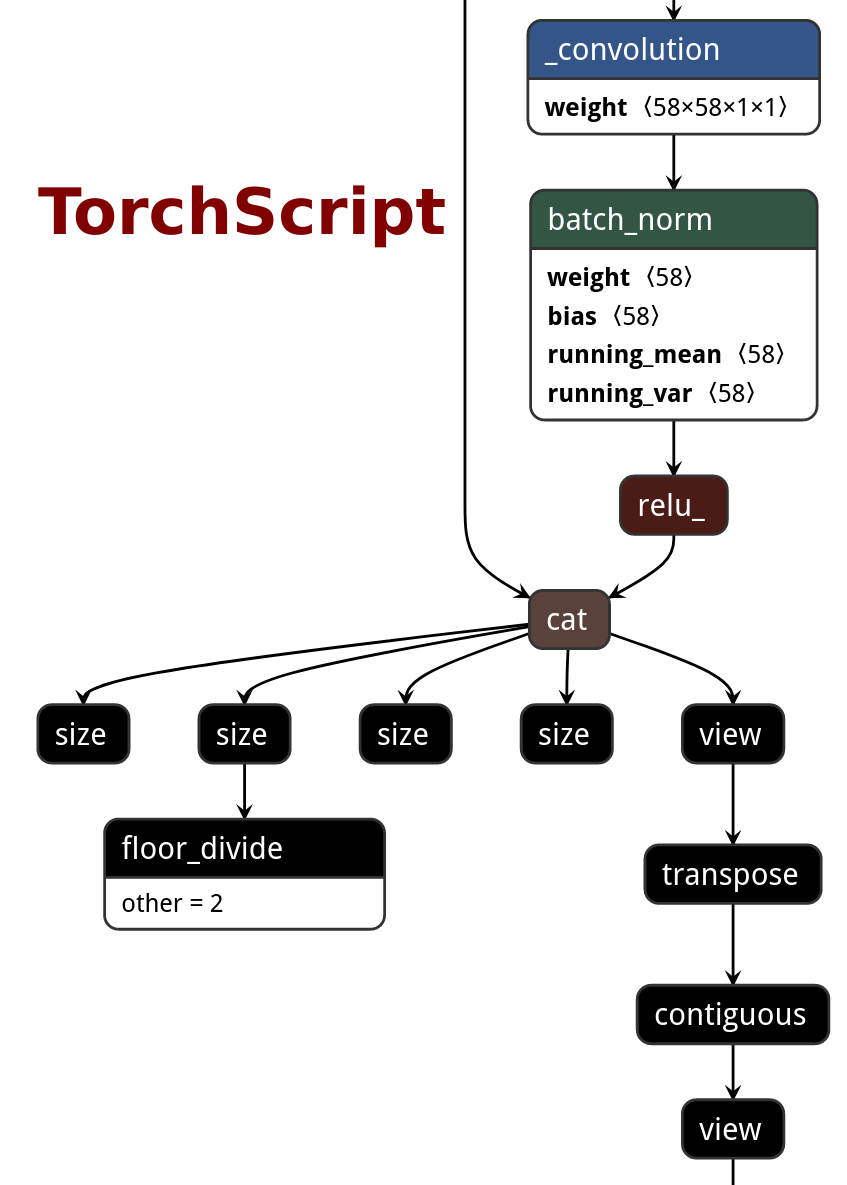

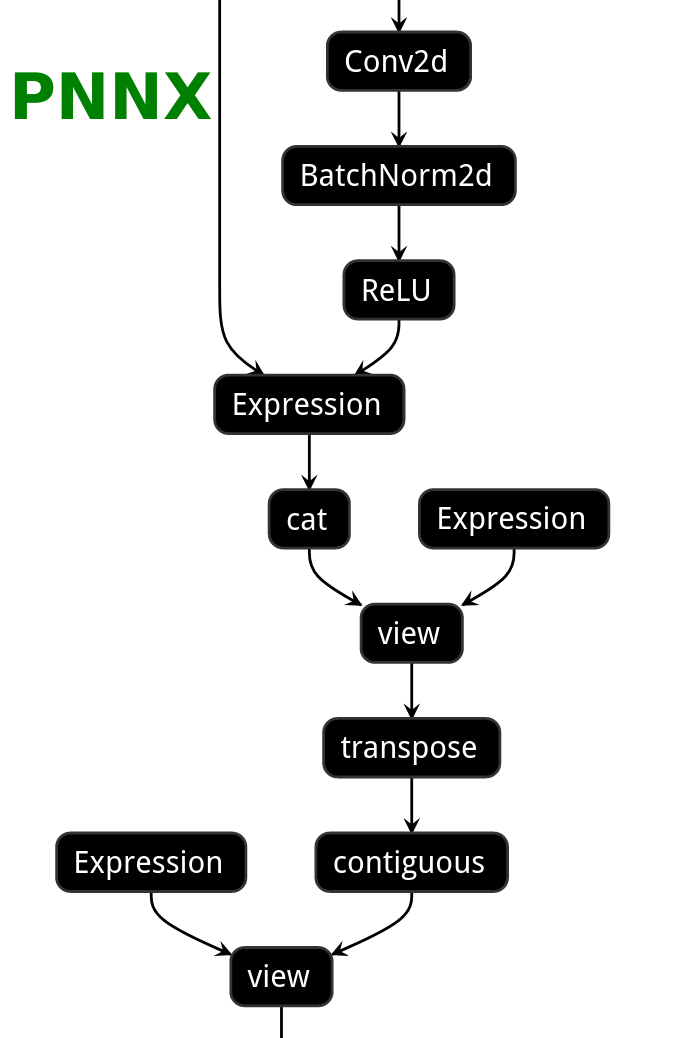

Here is the netron visualization comparision among ONNX, TorchScript and PNNX with the original PyTorch python code shown.

|

||||

|

||||

```python

|

||||

import torch

|

||||

import torch.nn as nn

|

||||

|

||||

class Model(nn.Module):

|

||||

def __init__(self):

|

||||

super(Model, self).__init__()

|

||||

|

||||

self.attention = nn.MultiheadAttention(embed_dim=256, num_heads=32)

|

||||

|

||||

def forward(self, x):

|

||||

x, _ = self.attention(x, x, x)

|

||||

return x

|

||||

```

|

||||

|

||||

|ONNX|TorchScript|PNNX|

|

||||

|----|---|---|

|

||||

||||

|

||||

|

||||

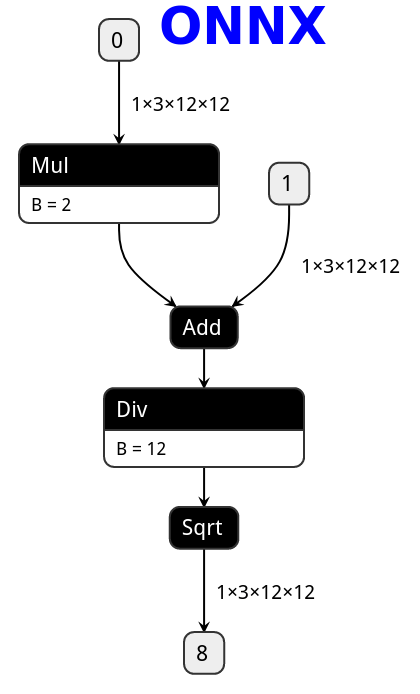

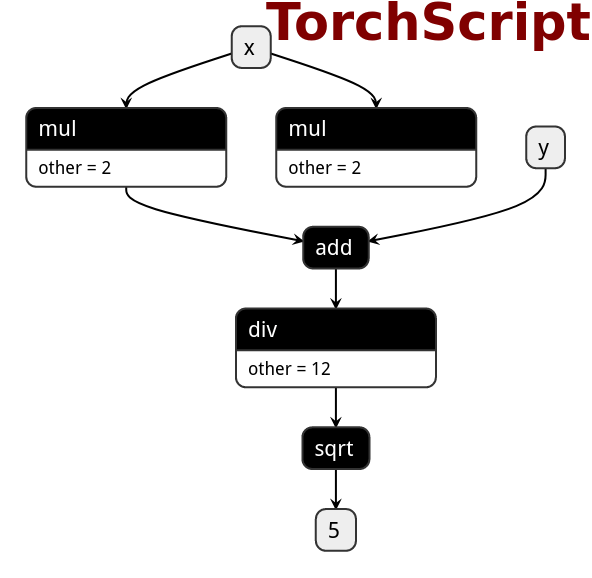

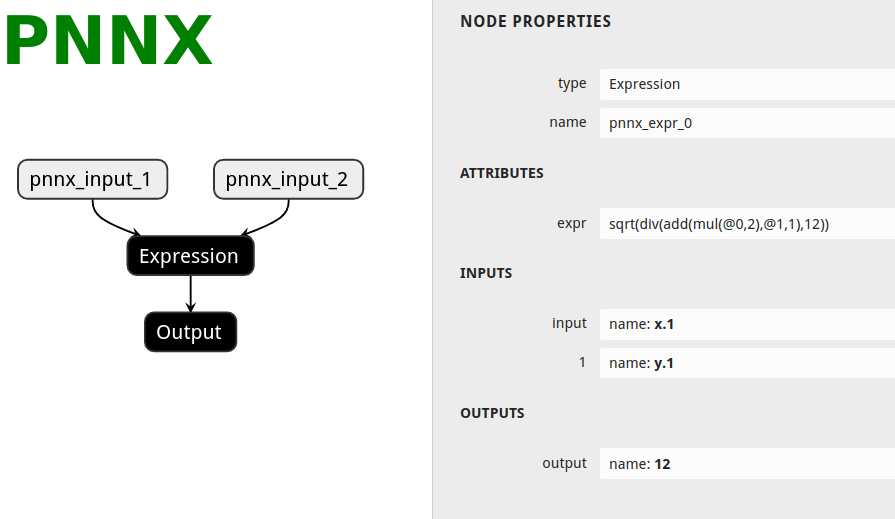

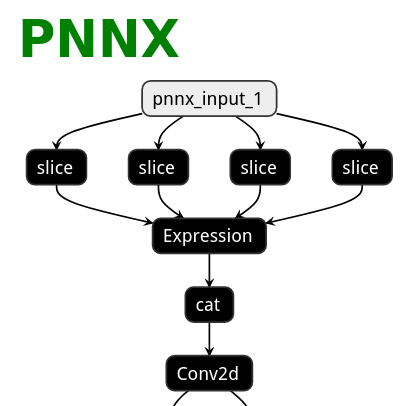

# PNNX expression operator

|

||||

PNNX trys to preserve expression from what PyTorch python code writes.

|

||||

|

||||

Here is the netron visualization comparision among ONNX, TorchScript and PNNX with the original PyTorch python code shown.

|

||||

|

||||

```python

|

||||

import torch

|

||||

|

||||

def foo(x, y):

|

||||

return torch.sqrt((2 * x + y) / 12)

|

||||

```

|

||||

|

||||

|ONNX|TorchScript|PNNX|

|

||||

|---|---|---|

|

||||

||||

|

||||

|

||||

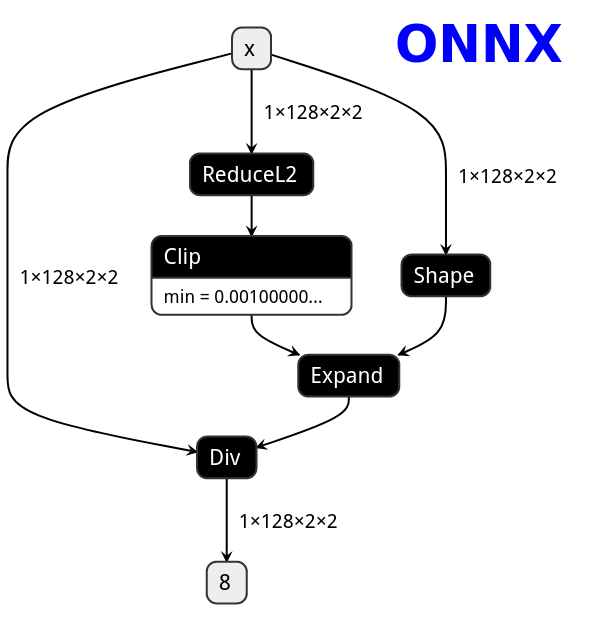

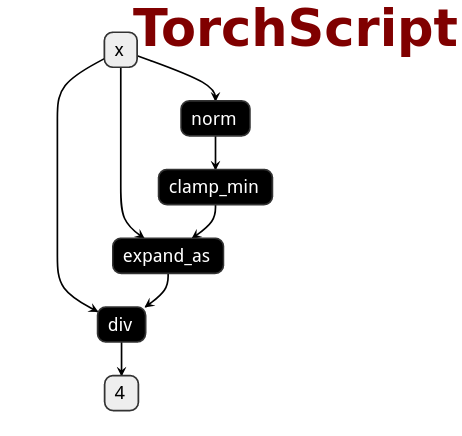

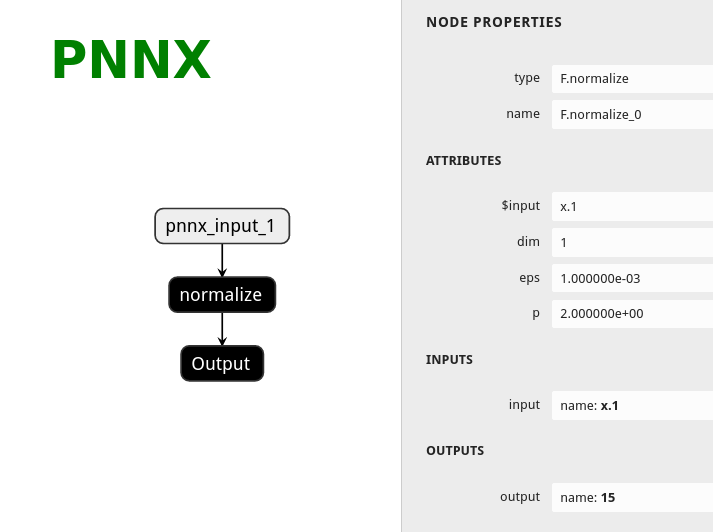

# PNNX torch function operator

|

||||

PNNX trys to preserve torch functions and Tensor member functions as one operator from what PyTorch python api provides.

|

||||

|

||||

Here is the netron visualization comparision among ONNX, TorchScript and PNNX with the original PyTorch python code shown.

|

||||

|

||||

```python

|

||||

import torch

|

||||

import torch.nn.functional as F

|

||||

|

||||

class Model(nn.Module):

|

||||

def __init__(self):

|

||||

super(Model, self).__init__()

|

||||

|

||||

def forward(self, x):

|

||||

x = F.normalize(x, eps=1e-3)

|

||||

return x

|

||||

```

|

||||

|

||||

|ONNX|TorchScript|PNNX|

|

||||

|---|---|---|

|

||||

||||

|

||||

|

||||

|

||||

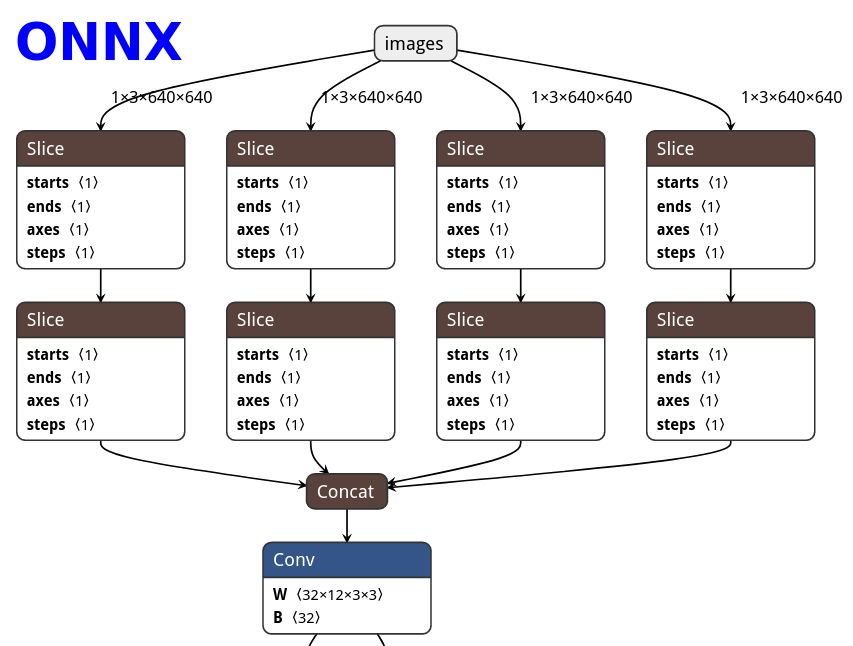

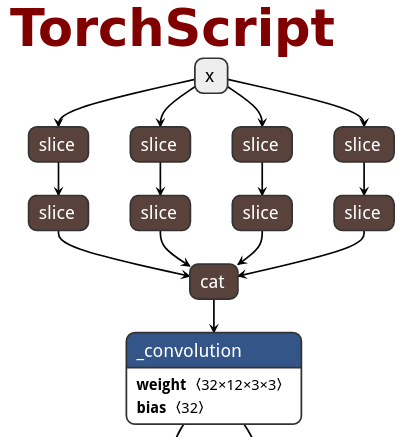

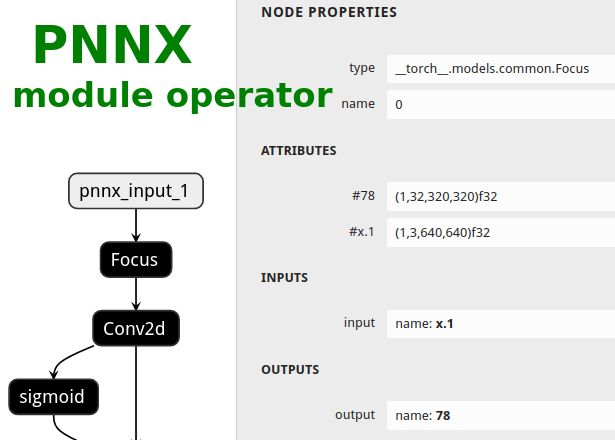

# PNNX module operator

|

||||

Users could ask PNNX to keep module as one big operator when it has complex logic.

|

||||

|

||||

The process is optional and could be enabled via moduleop command line option.

|

||||

|

||||

After pass_level0, all modules will be presented in terminal output, then you can pick the intersting ones as module operators.

|

||||

```

|

||||

############# pass_level0

|

||||

inline module = models.common.Bottleneck

|

||||

inline module = models.common.C3

|

||||

inline module = models.common.Concat

|

||||

inline module = models.common.Conv

|

||||

inline module = models.common.Focus

|

||||

inline module = models.common.SPP

|

||||

inline module = models.yolo.Detect

|

||||

inline module = utils.activations.SiLU

|

||||

```

|

||||

|

||||

```bash

|

||||

pnnx yolov5s.pt inputshape=[1,3,640,640] moduleop=models.common.Focus,models.yolo.Detect

|

||||

```

|

||||

|

||||

Here is the netron visualization comparision among ONNX, TorchScript and PNNX with the original PyTorch python code shown.

|

||||

|

||||

```python

|

||||

import torch

|

||||

import torch.nn as nn

|

||||

|

||||

class Focus(nn.Module):

|

||||

# Focus wh information into c-space

|

||||

def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): # ch_in, ch_out, kernel, stride, padding, groups

|

||||

super().__init__()

|

||||

self.conv = Conv(c1 * 4, c2, k, s, p, g, act)

|

||||

|

||||

def forward(self, x): # x(b,c,w,h) -> y(b,4c,w/2,h/2)

|

||||

return self.conv(torch.cat([x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], 1))

|

||||

```

|

||||

|

||||

|ONNX|TorchScript|PNNX|PNNX with module operator|

|

||||

|---|---|---|---|

|

||||

|||||

|

||||

|

||||

|

||||

# PNNX python inference

|

||||

|

||||

A python script will be generated by default when converting torchscript to pnnx.

|

||||

|

||||

This script is the python code representation of PNNX and can be used for model inference.

|

||||

|

||||

There are some utility functions for loading weight binary from pnnx.bin.

|

||||

|

||||

You can even export the model torchscript AGAIN from this generated code!

|

||||

|

||||

```python

|

||||

import torch

|

||||

import torch.nn as nn

|

||||

import torch.nn.functional as F

|

||||

|

||||

class Model(nn.Module):

|

||||

def __init__(self):

|

||||

super(Model, self).__init__()

|

||||

|

||||

self.linear_0 = nn.Linear(in_features=128, out_features=256, bias=True)

|

||||

self.linear_1 = nn.Linear(in_features=256, out_features=4, bias=True)

|

||||

|

||||

def forward(self, x):

|

||||

x = self.linear_0(x)

|

||||

x = F.leaky_relu(x, 0.15)

|

||||

x = self.linear_1(x)

|

||||

return x

|

||||

```

|

||||

|

||||

```python

|

||||

import os

|

||||

import numpy as np

|

||||

import tempfile, zipfile

|

||||

import torch

|

||||

import torch.nn as nn

|

||||

import torch.nn.functional as F

|

||||

|

||||

class Model(nn.Module):

|

||||

def __init__(self):

|

||||

super(Model, self).__init__()

|

||||

|

||||

self.linear_0 = nn.Linear(bias=True, in_features=128, out_features=256)

|

||||

self.linear_1 = nn.Linear(bias=True, in_features=256, out_features=4)

|

||||

|

||||

archive = zipfile.ZipFile('../../function.pnnx.bin', 'r')

|

||||

self.linear_0.bias = self.load_pnnx_bin_as_parameter(archive, 'linear_0.bias', (256), 'float32')

|

||||

self.linear_0.weight = self.load_pnnx_bin_as_parameter(archive, 'linear_0.weight', (256,128), 'float32')

|

||||

self.linear_1.bias = self.load_pnnx_bin_as_parameter(archive, 'linear_1.bias', (4), 'float32')

|

||||

self.linear_1.weight = self.load_pnnx_bin_as_parameter(archive, 'linear_1.weight', (4,256), 'float32')

|

||||

archive.close()

|

||||

|

||||

def load_pnnx_bin_as_parameter(self, archive, key, shape, dtype):

|

||||

return nn.Parameter(self.load_pnnx_bin_as_tensor(archive, key, shape, dtype))

|

||||

|

||||

def load_pnnx_bin_as_tensor(self, archive, key, shape, dtype):

|

||||

_, tmppath = tempfile.mkstemp()

|

||||

tmpf = open(tmppath, 'wb')

|

||||

with archive.open(key) as keyfile:

|

||||

tmpf.write(keyfile.read())

|

||||

tmpf.close()

|

||||

m = np.memmap(tmppath, dtype=dtype, mode='r', shape=shape).copy()

|

||||

os.remove(tmppath)

|

||||

return torch.from_numpy(m)

|

||||

|

||||

def forward(self, v_x_1):

|

||||

v_7 = self.linear_0(v_x_1)

|

||||

v_input_1 = F.leaky_relu(input=v_7, negative_slope=0.150000)

|

||||

v_12 = self.linear_1(v_input_1)

|

||||

return v_12

|

||||

```

|

||||

|

||||

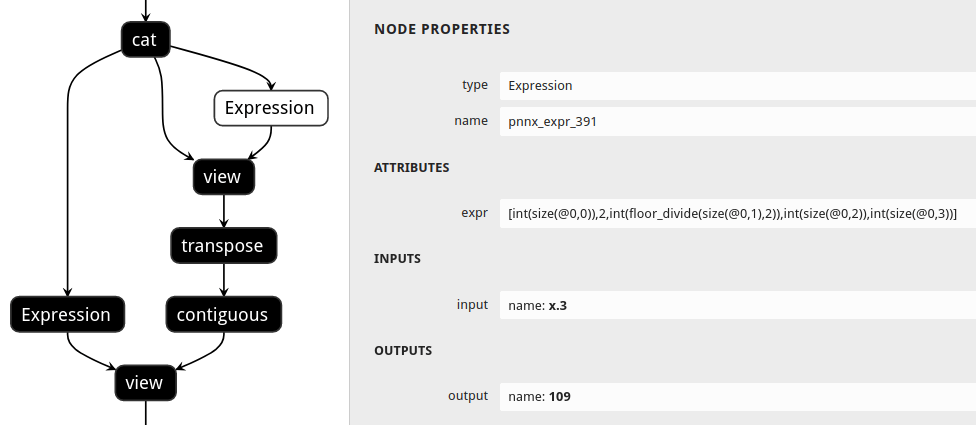

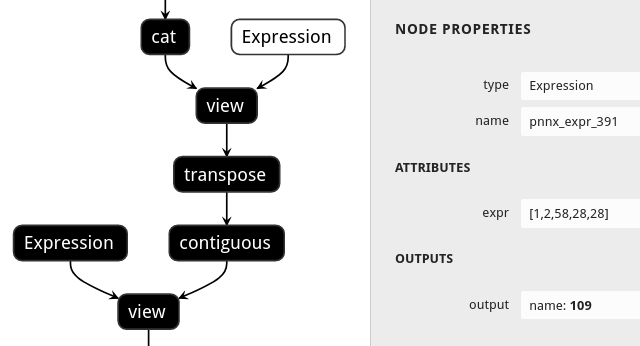

# PNNX shape propagation

|

||||

Users could ask PNNX to resolve all tensor shapes in model graph and constify some common expressions involved when tensor shapes are known.

|

||||

|

||||

The process is optional and could be enabled via inputshape command line option.

|

||||

|

||||

```bash

|

||||

pnnx shufflenet_v2_x1_0.pt inputshape=[1,3,224,224]

|

||||

```

|

||||

|

||||

```python

|

||||

def channel_shuffle(x: Tensor, groups: int) -> Tensor:

|

||||

batchsize, num_channels, height, width = x.size()

|

||||

channels_per_group = num_channels // groups

|

||||

|

||||

# reshape

|

||||

x = x.view(batchsize, groups, channels_per_group, height, width)

|

||||

|

||||

x = torch.transpose(x, 1, 2).contiguous()

|

||||

|

||||

# flatten

|

||||

x = x.view(batchsize, -1, height, width)

|

||||

|

||||

return x

|

||||

```

|

||||

|

||||

|without shape propagation|with shape propagation|

|

||||

|---|---|

|

||||

|||

|

||||

|

||||

|

||||

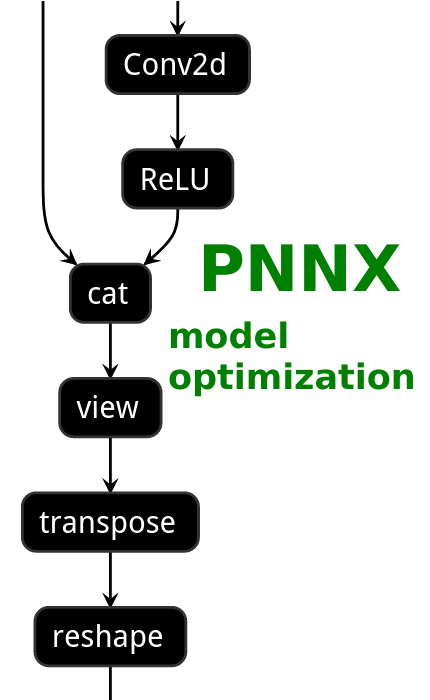

# PNNX model optimization

|

||||

|

||||

|ONNX|TorchScript|PNNX without optimization|PNNX with optimization|

|

||||

|---|---|---|---|

|

||||

|||||

|

||||

|

||||

|

||||

# PNNX custom operator

|

||||

|

||||

```python

|

||||

import os

|

||||

|

||||

import torch

|

||||

from torch.autograd import Function

|

||||

from torch.utils.cpp_extension import load, _import_module_from_library

|

||||

|

||||

module_path = os.path.dirname(__file__)

|

||||

upfirdn2d_op = load(

|

||||

'upfirdn2d',

|

||||

sources=[

|

||||

os.path.join(module_path, 'upfirdn2d.cpp'),

|

||||

os.path.join(module_path, 'upfirdn2d_kernel.cu'),

|

||||

],

|

||||

is_python_module=False

|

||||

)

|

||||

|

||||

def upfirdn2d(input, kernel, up=1, down=1, pad=(0, 0)):

|

||||

pad_x0 = pad[0]

|

||||

pad_x1 = pad[1]

|

||||

pad_y0 = pad[0]

|

||||

pad_y1 = pad[1]

|

||||

|

||||

kernel_h, kernel_w = kernel.shape

|

||||

batch, channel, in_h, in_w = input.shape

|

||||

|

||||

input = input.reshape(-1, in_h, in_w, 1)

|

||||

|

||||

out_h = (in_h * up + pad_y0 + pad_y1 - kernel_h) // down + 1

|

||||

out_w = (in_w * up + pad_x0 + pad_x1 - kernel_w) // down + 1

|

||||

|

||||

out = torch.ops.upfirdn2d_op.upfirdn2d(input, kernel, up, up, down, down, pad_x0, pad_x1, pad_y0, pad_y1)

|

||||

|

||||

out = out.view(-1, channel, out_h, out_w)

|

||||

|

||||

return out

|

||||

```

|

||||

|

||||

```cpp

|

||||

#include <torch/extension.h>

|

||||

|

||||

torch::Tensor upfirdn2d(const torch::Tensor& input, const torch::Tensor& kernel,

|

||||

int64_t up_x, int64_t up_y, int64_t down_x, int64_t down_y,

|

||||

int64_t pad_x0, int64_t pad_x1, int64_t pad_y0, int64_t pad_y1) {

|

||||

// operator body

|

||||

}

|

||||

|

||||

TORCH_LIBRARY(upfirdn2d_op, m) {

|

||||

m.def("upfirdn2d", upfirdn2d);

|

||||

}

|

||||

```

|

||||

|

||||

<img src="https://raw.githubusercontent.com/nihui/ncnn-assets/master/pnnx/customop.pnnx.png" width="400" />

|

||||

|

||||

# Supported PyTorch operator status

|

||||

|

||||

| torch.nn | Is Supported | Export to ncnn |

|

||||

|---------------------------|----|---|

|

||||

|nn.AdaptiveAvgPool1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.AdaptiveAvgPool2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.AdaptiveAvgPool3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.AdaptiveMaxPool1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.AdaptiveMaxPool2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.AdaptiveMaxPool3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.AlphaDropout | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.AvgPool1d | :heavy_check_mark: | :heavy_check_mark:* |

|

||||

|nn.AvgPool2d | :heavy_check_mark: | :heavy_check_mark:* |

|

||||

|nn.AvgPool3d | :heavy_check_mark: | :heavy_check_mark:* |

|

||||

|nn.BatchNorm1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.BatchNorm2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.BatchNorm3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Bilinear | |

|

||||

|nn.CELU | :heavy_check_mark: |

|

||||

|nn.ChannelShuffle | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ConstantPad1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ConstantPad2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ConstantPad3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Conv1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Conv2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Conv3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ConvTranspose1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ConvTranspose2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ConvTranspose3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.CosineSimilarity | |

|

||||

|nn.Dropout | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Dropout2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Dropout3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ELU | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Embedding | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.EmbeddingBag | |

|

||||

|nn.Flatten | :heavy_check_mark: |

|

||||

|nn.Fold | |

|

||||

|nn.FractionalMaxPool2d | |

|

||||

|nn.FractionalMaxPool3d | |

|

||||

|nn.GELU | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.GroupNorm | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.GRU | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.GRUCell | |

|

||||

|nn.Hardshrink | :heavy_check_mark: |

|

||||

|nn.Hardsigmoid | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Hardswish | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Hardtanh | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Identity | |

|

||||

|nn.InstanceNorm1d | :heavy_check_mark: |

|

||||

|nn.InstanceNorm2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.InstanceNorm3d | :heavy_check_mark: |

|

||||

|nn.LayerNorm | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.LazyBatchNorm1d | |

|

||||

|nn.LazyBatchNorm2d | |

|

||||

|nn.LazyBatchNorm3d | |

|

||||

|nn.LazyConv1d | |

|

||||

|nn.LazyConv2d | |

|

||||

|nn.LazyConv3d | |

|

||||

|nn.LazyConvTranspose1d | |

|

||||

|nn.LazyConvTranspose2d | |

|

||||

|nn.LazyConvTranspose3d | |

|

||||

|nn.LazyLinear | |

|

||||

|nn.LeakyReLU | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Linear | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.LocalResponseNorm | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.LogSigmoid | :heavy_check_mark: |

|

||||

|nn.LogSoftmax | :heavy_check_mark: |

|

||||

|nn.LPPool1d | :heavy_check_mark: |

|

||||

|nn.LPPool2d | :heavy_check_mark: |

|

||||

|nn.LSTM | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.LSTMCell | |

|

||||

|nn.MaxPool1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.MaxPool2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.MaxPool3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.MaxUnpool1d | |

|

||||

|nn.MaxUnpool2d | |

|

||||

|nn.MaxUnpool3d | |

|

||||

|nn.Mish | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.MultiheadAttention | :heavy_check_mark: | :heavy_check_mark:* |

|

||||

|nn.PairwiseDistance | |

|

||||

|nn.PixelShuffle | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.PixelUnshuffle | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.PReLU | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ReflectionPad1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ReflectionPad2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ReLU | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ReLU6 | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ReplicationPad1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ReplicationPad2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ReplicationPad3d | :heavy_check_mark: |

|

||||

|nn.RNN | :heavy_check_mark: | :heavy_check_mark:* |

|

||||

|nn.RNNBase | |

|

||||

|nn.RNNCell | |

|

||||

|nn.RReLU | :heavy_check_mark: |

|

||||

|nn.SELU | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Sigmoid | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.SiLU | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Softmax | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Softmax2d | |

|

||||

|nn.Softmin | :heavy_check_mark: |

|

||||

|nn.Softplus | :heavy_check_mark: |

|

||||

|nn.Softshrink | :heavy_check_mark: |

|

||||

|nn.Softsign | :heavy_check_mark: |

|

||||

|nn.SyncBatchNorm | |

|

||||

|nn.Tanh | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.Tanhshrink | :heavy_check_mark: |

|

||||

|nn.Threshold | :heavy_check_mark: |

|

||||

|nn.Transformer | |

|

||||

|nn.TransformerDecoder | |

|

||||

|nn.TransformerDecoderLayer | |

|

||||

|nn.TransformerEncoder | |

|

||||

|nn.TransformerEncoderLayer | |

|

||||

|nn.Unflatten | |

|

||||

|nn.Unfold | |

|

||||

|nn.Upsample | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.UpsamplingBilinear2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.UpsamplingNearest2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|nn.ZeroPad2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|

||||

|

||||

| torch.nn.functional | Is Supported | Export to ncnn |

|

||||

|---------------------------|----|----|

|

||||

|F.adaptive_avg_pool1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.adaptive_avg_pool2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.adaptive_avg_pool3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.adaptive_max_pool1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.adaptive_max_pool2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.adaptive_max_pool3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.affine_grid | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.alpha_dropout | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.avg_pool1d | :heavy_check_mark: | :heavy_check_mark:* |

|

||||

|F.avg_pool2d | :heavy_check_mark: | :heavy_check_mark:* |

|

||||

|F.avg_pool3d | :heavy_check_mark: | :heavy_check_mark:* |

|

||||

|F.batch_norm | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.bilinear | |

|

||||

|F.celu | :heavy_check_mark: |

|

||||

|F.conv1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.conv2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.conv3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.conv_transpose1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.conv_transpose2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.conv_transpose3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.cosine_similarity | |

|

||||

|F.dropout | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.dropout2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.dropout3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.elu | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.elu_ | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.embedding | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.embedding_bag | |

|

||||

|F.feature_alpha_dropout | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.fold | |

|

||||

|F.fractional_max_pool2d | |

|

||||

|F.fractional_max_pool3d | |

|

||||

|F.gelu | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.glu | |

|

||||

|F.grid_sample | :heavy_check_mark: |

|

||||

|F.group_norm | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.gumbel_softmax | |

|

||||

|F.hardshrink | :heavy_check_mark: |

|

||||

|F.hardsigmoid | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.hardswish | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.hardtanh | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.hardtanh_ | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.instance_norm | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.interpolate | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.layer_norm | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.leaky_relu | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.leaky_relu_ | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.linear | :heavy_check_mark: | :heavy_check_mark:* |

|

||||

|F.local_response_norm | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.logsigmoid | :heavy_check_mark: |

|

||||

|F.log_softmax | :heavy_check_mark: |

|

||||

|F.lp_pool1d | :heavy_check_mark: |

|

||||

|F.lp_pool2d | :heavy_check_mark: |

|

||||

|F.max_pool1d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.max_pool2d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.max_pool3d | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.max_unpool1d | |

|

||||

|F.max_unpool2d | |

|

||||

|F.max_unpool3d | |

|

||||

|F.mish | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.normalize | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.one_hot | |

|

||||

|F.pad | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.pairwise_distance | |

|

||||

|F.pdist | |

|

||||

|F.pixel_shuffle | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.pixel_unshuffle | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.prelu | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.relu | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.relu_ | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.relu6 | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.rrelu | :heavy_check_mark: |

|

||||

|F.rrelu_ | :heavy_check_mark: |

|

||||

|F.selu | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.sigmoid | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.silu | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.softmax | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.softmin | :heavy_check_mark: |

|

||||

|F.softplus | :heavy_check_mark: |

|

||||

|F.softshrink | :heavy_check_mark: |

|

||||

|F.softsign | :heavy_check_mark: |

|

||||

|F.tanh | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.tanhshrink | :heavy_check_mark: |

|

||||

|F.threshold | :heavy_check_mark: |

|

||||

|F.threshold_ | :heavy_check_mark: |

|

||||

|F.unfold | |

|

||||

|F.upsample | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.upsample_bilinear | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|F.upsample_nearest | :heavy_check_mark: | :heavy_check_mark: |

|

||||

32

3rdparty/ncnn/tools/pnnx/cmake/PNNXPyTorch.cmake

vendored

Normal file

32

3rdparty/ncnn/tools/pnnx/cmake/PNNXPyTorch.cmake

vendored

Normal file

@@ -0,0 +1,32 @@

|

||||

# reference to https://github.com/llvm/torch-mlir/blob/main/python/torch_mlir/dialects/torch/importer/jit_ir/cmake/modules/TorchMLIRPyTorch.cmake

|

||||

|

||||

# PNNXProbeForPyTorchInstall

|

||||

# Attempts to find a Torch installation and set the Torch_ROOT variable

|

||||

# based on introspecting the python environment. This allows a subsequent

|

||||

# call to find_package(Torch) to work.

|

||||

function(PNNXProbeForPyTorchInstall)

|

||||

if(Torch_ROOT)

|

||||

message(STATUS "Using cached Torch root = ${Torch_ROOT}")

|

||||

elseif(Torch_INSTALL_DIR)

|

||||

message(STATUS "Using cached Torch install dir = ${Torch_INSTALL_DIR}")

|

||||

set(Torch_DIR "${Torch_INSTALL_DIR}/share/cmake/Torch" CACHE STRING "Torch dir" FORCE)

|

||||

else()

|

||||

#find_package (Python3 COMPONENTS Interpreter Development)

|

||||

find_package (Python3)

|

||||

message(STATUS "Checking for PyTorch using ${Python3_EXECUTABLE} ...")

|

||||

execute_process(

|

||||

COMMAND "${Python3_EXECUTABLE}"

|

||||

-c "import os;import torch;print(torch.utils.cmake_prefix_path, end='')"

|

||||

WORKING_DIRECTORY ${CMAKE_CURRENT_SOURCE_DIR}

|

||||

RESULT_VARIABLE PYTORCH_STATUS

|

||||

OUTPUT_VARIABLE PYTORCH_PACKAGE_DIR)

|

||||

if(NOT PYTORCH_STATUS EQUAL "0")

|

||||

message(STATUS "Unable to 'import torch' with ${Python3_EXECUTABLE} (fallback to explicit config)")

|

||||

return()

|

||||

endif()

|

||||

message(STATUS "Found PyTorch installation at ${PYTORCH_PACKAGE_DIR}")

|

||||

|

||||

set(Torch_ROOT "${PYTORCH_PACKAGE_DIR}" CACHE STRING

|

||||

"Torch configure directory" FORCE)

|

||||

endif()

|

||||

endfunction()

|

||||

488

3rdparty/ncnn/tools/pnnx/src/CMakeLists.txt

vendored

Normal file

488

3rdparty/ncnn/tools/pnnx/src/CMakeLists.txt

vendored

Normal file

@@ -0,0 +1,488 @@

|

||||

|

||||

include_directories(${CMAKE_CURRENT_SOURCE_DIR})

|

||||

|

||||

set(pnnx_pass_level0_SRCS

|

||||

pass_level0/constant_unpooling.cpp

|

||||

pass_level0/inline_block.cpp

|

||||

pass_level0/shape_inference.cpp

|

||||

)

|

||||

|

||||

set(pnnx_pass_level1_SRCS

|

||||

pass_level1/nn_AdaptiveAvgPool1d.cpp

|

||||

pass_level1/nn_AdaptiveAvgPool2d.cpp

|

||||

pass_level1/nn_AdaptiveAvgPool3d.cpp

|

||||

pass_level1/nn_AdaptiveMaxPool1d.cpp

|

||||

pass_level1/nn_AdaptiveMaxPool2d.cpp

|

||||

pass_level1/nn_AdaptiveMaxPool3d.cpp

|

||||

pass_level1/nn_AlphaDropout.cpp

|

||||

pass_level1/nn_AvgPool1d.cpp

|

||||

pass_level1/nn_AvgPool2d.cpp

|

||||

pass_level1/nn_AvgPool3d.cpp

|

||||

pass_level1/nn_BatchNorm1d.cpp

|

||||

pass_level1/nn_BatchNorm2d.cpp

|

||||

pass_level1/nn_BatchNorm3d.cpp

|

||||

pass_level1/nn_CELU.cpp

|

||||

pass_level1/nn_ChannelShuffle.cpp

|

||||

pass_level1/nn_ConstantPad1d.cpp

|

||||

pass_level1/nn_ConstantPad2d.cpp

|

||||

pass_level1/nn_ConstantPad3d.cpp

|

||||

pass_level1/nn_Conv1d.cpp

|

||||

pass_level1/nn_Conv2d.cpp

|

||||

pass_level1/nn_Conv3d.cpp

|

||||

pass_level1/nn_ConvTranspose1d.cpp

|

||||

pass_level1/nn_ConvTranspose2d.cpp

|

||||

pass_level1/nn_ConvTranspose3d.cpp

|

||||

pass_level1/nn_Dropout.cpp

|

||||

pass_level1/nn_Dropout2d.cpp

|

||||

pass_level1/nn_Dropout3d.cpp

|

||||

pass_level1/nn_ELU.cpp

|

||||

pass_level1/nn_Embedding.cpp

|

||||

pass_level1/nn_GELU.cpp

|

||||

pass_level1/nn_GroupNorm.cpp

|

||||

pass_level1/nn_GRU.cpp

|

||||

pass_level1/nn_Hardshrink.cpp

|

||||

pass_level1/nn_Hardsigmoid.cpp

|

||||

pass_level1/nn_Hardswish.cpp

|

||||

pass_level1/nn_Hardtanh.cpp

|

||||

pass_level1/nn_InstanceNorm1d.cpp

|

||||

pass_level1/nn_InstanceNorm2d.cpp

|

||||

pass_level1/nn_InstanceNorm3d.cpp

|

||||

pass_level1/nn_LayerNorm.cpp

|

||||

pass_level1/nn_LeakyReLU.cpp

|

||||

pass_level1/nn_Linear.cpp

|

||||

pass_level1/nn_LocalResponseNorm.cpp

|

||||

pass_level1/nn_LogSigmoid.cpp

|

||||

pass_level1/nn_LogSoftmax.cpp

|

||||

pass_level1/nn_LPPool1d.cpp

|

||||

pass_level1/nn_LPPool2d.cpp

|

||||

pass_level1/nn_LSTM.cpp

|

||||

pass_level1/nn_MaxPool1d.cpp

|

||||

pass_level1/nn_MaxPool2d.cpp

|

||||

pass_level1/nn_MaxPool3d.cpp

|

||||

#pass_level1/nn_maxunpool2d.cpp

|

||||

pass_level1/nn_Mish.cpp

|

||||

pass_level1/nn_MultiheadAttention.cpp

|

||||

pass_level1/nn_PixelShuffle.cpp

|

||||

pass_level1/nn_PixelUnshuffle.cpp

|

||||

pass_level1/nn_PReLU.cpp

|

||||

pass_level1/nn_ReflectionPad1d.cpp

|

||||

pass_level1/nn_ReflectionPad2d.cpp

|

||||

pass_level1/nn_ReLU.cpp

|

||||

pass_level1/nn_ReLU6.cpp

|

||||

pass_level1/nn_ReplicationPad1d.cpp

|

||||

pass_level1/nn_ReplicationPad2d.cpp

|

||||

pass_level1/nn_ReplicationPad3d.cpp

|

||||

pass_level1/nn_RNN.cpp

|

||||

pass_level1/nn_RReLU.cpp

|

||||

pass_level1/nn_SELU.cpp

|

||||

pass_level1/nn_Sigmoid.cpp

|

||||

pass_level1/nn_SiLU.cpp

|

||||

pass_level1/nn_Softmax.cpp

|

||||

pass_level1/nn_Softmin.cpp

|

||||

pass_level1/nn_Softplus.cpp

|

||||

pass_level1/nn_Softshrink.cpp

|

||||

pass_level1/nn_Softsign.cpp

|

||||

pass_level1/nn_Tanh.cpp

|

||||

pass_level1/nn_Tanhshrink.cpp

|

||||

pass_level1/nn_Threshold.cpp

|

||||

pass_level1/nn_Upsample.cpp

|

||||

pass_level1/nn_UpsamplingBilinear2d.cpp

|

||||

pass_level1/nn_UpsamplingNearest2d.cpp

|

||||

pass_level1/nn_ZeroPad2d.cpp

|

||||

|

||||

pass_level1/nn_quantized_Conv2d.cpp

|

||||

pass_level1/nn_quantized_DeQuantize.cpp

|

||||

pass_level1/nn_quantized_Linear.cpp

|

||||

pass_level1/nn_quantized_Quantize.cpp

|

||||

|

||||

pass_level1/torchvision_DeformConv2d.cpp

|

||||

pass_level1/torchvision_RoIAlign.cpp

|

||||

)

|

||||

|

||||

set(pnnx_pass_level2_SRCS

|

||||

pass_level2/F_adaptive_avg_pool1d.cpp

|

||||

pass_level2/F_adaptive_avg_pool2d.cpp

|

||||

pass_level2/F_adaptive_avg_pool3d.cpp

|

||||

pass_level2/F_adaptive_max_pool1d.cpp

|

||||

pass_level2/F_adaptive_max_pool2d.cpp

|

||||

pass_level2/F_adaptive_max_pool3d.cpp

|

||||

pass_level2/F_alpha_dropout.cpp

|

||||

pass_level2/F_affine_grid.cpp

|

||||

pass_level2/F_avg_pool1d.cpp

|

||||

pass_level2/F_avg_pool2d.cpp

|

||||

pass_level2/F_avg_pool3d.cpp

|

||||

pass_level2/F_batch_norm.cpp

|

||||

pass_level2/F_celu.cpp

|

||||

pass_level2/F_conv1d.cpp

|

||||

pass_level2/F_conv2d.cpp

|

||||

pass_level2/F_conv3d.cpp

|

||||

pass_level2/F_conv_transpose123d.cpp

|

||||

pass_level2/F_dropout.cpp

|

||||

pass_level2/F_dropout23d.cpp

|

||||

pass_level2/F_elu.cpp

|

||||

pass_level2/F_embedding.cpp

|

||||

pass_level2/F_feature_alpha_dropout.cpp

|

||||

pass_level2/F_gelu.cpp

|

||||

pass_level2/F_grid_sample.cpp

|

||||

pass_level2/F_group_norm.cpp

|

||||

pass_level2/F_hardshrink.cpp

|

||||

pass_level2/F_hardsigmoid.cpp

|

||||

pass_level2/F_hardswish.cpp

|

||||

pass_level2/F_hardtanh.cpp

|

||||

pass_level2/F_instance_norm.cpp

|

||||

pass_level2/F_interpolate.cpp

|

||||

pass_level2/F_layer_norm.cpp

|

||||

pass_level2/F_leaky_relu.cpp

|

||||

pass_level2/F_linear.cpp

|

||||

pass_level2/F_local_response_norm.cpp

|

||||

pass_level2/F_log_softmax.cpp

|

||||

pass_level2/F_logsigmoid.cpp

|

||||

pass_level2/F_lp_pool1d.cpp

|

||||

pass_level2/F_lp_pool2d.cpp

|

||||

pass_level2/F_max_pool1d.cpp

|

||||

pass_level2/F_max_pool2d.cpp

|

||||

pass_level2/F_max_pool3d.cpp

|

||||

pass_level2/F_mish.cpp

|

||||

pass_level2/F_normalize.cpp

|

||||

pass_level2/F_pad.cpp

|

||||

pass_level2/F_pixel_shuffle.cpp

|

||||

pass_level2/F_pixel_unshuffle.cpp

|

||||

pass_level2/F_prelu.cpp

|

||||

pass_level2/F_relu.cpp

|

||||

pass_level2/F_relu6.cpp

|

||||

pass_level2/F_rrelu.cpp

|

||||

pass_level2/F_selu.cpp

|

||||

pass_level2/F_sigmoid.cpp

|

||||

pass_level2/F_silu.cpp

|

||||

pass_level2/F_softmax.cpp

|

||||

pass_level2/F_softmin.cpp

|

||||

pass_level2/F_softplus.cpp

|

||||

pass_level2/F_softshrink.cpp

|

||||

pass_level2/F_softsign.cpp

|

||||

pass_level2/F_tanh.cpp

|

||||

pass_level2/F_tanhshrink.cpp

|

||||

pass_level2/F_threshold.cpp

|

||||

pass_level2/F_upsample_bilinear.cpp

|

||||

pass_level2/F_upsample_nearest.cpp

|

||||

pass_level2/F_upsample.cpp

|

||||

pass_level2/Tensor_contiguous.cpp

|

||||

pass_level2/Tensor_expand.cpp

|

||||

pass_level2/Tensor_expand_as.cpp

|

||||

pass_level2/Tensor_index.cpp

|

||||

pass_level2/Tensor_new_empty.cpp

|

||||

pass_level2/Tensor_repeat.cpp

|

||||

pass_level2/Tensor_reshape.cpp

|

||||

pass_level2/Tensor_select.cpp

|

||||

pass_level2/Tensor_slice.cpp

|

||||

pass_level2/Tensor_view.cpp

|

||||

pass_level2/torch_addmm.cpp

|

||||

pass_level2/torch_amax.cpp

|

||||

pass_level2/torch_amin.cpp

|

||||

pass_level2/torch_arange.cpp

|

||||

pass_level2/torch_argmax.cpp

|

||||

pass_level2/torch_argmin.cpp

|

||||

pass_level2/torch_cat.cpp

|

||||

pass_level2/torch_chunk.cpp

|

||||

pass_level2/torch_clamp.cpp

|

||||

pass_level2/torch_clone.cpp

|

||||

pass_level2/torch_dequantize.cpp

|

||||

pass_level2/torch_empty.cpp

|

||||

pass_level2/torch_empty_like.cpp

|

||||

pass_level2/torch_flatten.cpp

|

||||

pass_level2/torch_flip.cpp

|

||||

pass_level2/torch_full.cpp

|

||||

pass_level2/torch_full_like.cpp

|

||||

pass_level2/torch_logsumexp.cpp

|

||||

pass_level2/torch_matmul.cpp

|

||||

pass_level2/torch_mean.cpp

|

||||

pass_level2/torch_norm.cpp

|

||||

pass_level2/torch_normal.cpp

|

||||

pass_level2/torch_ones.cpp

|

||||

pass_level2/torch_ones_like.cpp

|

||||

pass_level2/torch_prod.cpp

|

||||

pass_level2/torch_quantize_per_tensor.cpp

|

||||

pass_level2/torch_randn.cpp

|

||||

pass_level2/torch_randn_like.cpp

|

||||

pass_level2/torch_roll.cpp

|

||||

pass_level2/torch_split.cpp

|

||||

pass_level2/torch_squeeze.cpp

|

||||

pass_level2/torch_stack.cpp

|

||||

pass_level2/torch_sum.cpp

|

||||

pass_level2/torch_permute.cpp

|

||||

pass_level2/torch_transpose.cpp

|

||||

pass_level2/torch_unbind.cpp

|

||||

pass_level2/torch_unsqueeze.cpp

|

||||

pass_level2/torch_var.cpp

|

||||

pass_level2/torch_zeros.cpp

|

||||

pass_level2/torch_zeros_like.cpp

|

||||

|

||||

pass_level2/nn_quantized_FloatFunctional.cpp

|

||||

)

|

||||

|

||||

set(pnnx_pass_level3_SRCS

|

||||

pass_level3/assign_unique_name.cpp

|

||||

pass_level3/eliminate_noop_math.cpp

|

||||

pass_level3/eliminate_tuple_pair.cpp

|

||||

pass_level3/expand_quantization_modules.cpp

|

||||

pass_level3/fuse_cat_stack_tensors.cpp

|

||||

pass_level3/fuse_chunk_split_unbind_unpack.cpp

|

||||

pass_level3/fuse_expression.cpp

|

||||

pass_level3/fuse_index_expression.cpp

|

||||

pass_level3/fuse_rnn_unpack.cpp

|

||||

pass_level3/rename_F_conv_transposend.cpp

|

||||

pass_level3/rename_F_convmode.cpp

|

||||

pass_level3/rename_F_dropoutnd.cpp

|

||||

)

|

||||

|

||||

set(pnnx_pass_level4_SRCS

|

||||

pass_level4/canonicalize.cpp

|

||||

pass_level4/dead_code_elimination.cpp

|

||||

pass_level4/fuse_custom_op.cpp

|

||||

)

|

||||

|

||||

set(pnnx_pass_level5_SRCS

|

||||

pass_level5/eliminate_dropout.cpp

|

||||

pass_level5/eliminate_identity_operator.cpp

|

||||

pass_level5/eliminate_maxpool_indices.cpp

|

||||

pass_level5/eliminate_noop_expression.cpp

|

||||

pass_level5/eliminate_noop_pad.cpp

|

||||

pass_level5/eliminate_slice.cpp

|

||||

pass_level5/eliminate_view_reshape.cpp

|

||||

pass_level5/eval_expression.cpp

|

||||

pass_level5/fold_constants.cpp

|

||||

pass_level5/fuse_channel_shuffle.cpp

|

||||

pass_level5/fuse_constant_expression.cpp

|

||||

pass_level5/fuse_conv1d_batchnorm1d.cpp

|

||||

pass_level5/fuse_conv2d_batchnorm2d.cpp

|

||||

pass_level5/fuse_convtranspose1d_batchnorm1d.cpp

|

||||

pass_level5/fuse_convtranspose2d_batchnorm2d.cpp

|

||||

pass_level5/fuse_contiguous_view.cpp

|

||||

pass_level5/fuse_linear_batchnorm1d.cpp

|

||||

pass_level5/fuse_select_to_unbind.cpp

|

||||

pass_level5/fuse_slice_indices.cpp

|

||||

pass_level5/unroll_rnn_op.cpp

|

||||

)

|

||||

|

||||

set(pnnx_pass_ncnn_SRCS

|

||||

pass_ncnn/convert_attribute.cpp

|

||||

pass_ncnn/convert_custom_op.cpp

|

||||

pass_ncnn/convert_half_to_float.cpp

|

||||

pass_ncnn/convert_input.cpp

|

||||

pass_ncnn/convert_torch_cat.cpp

|

||||

pass_ncnn/convert_torch_chunk.cpp

|

||||

pass_ncnn/convert_torch_split.cpp

|

||||

pass_ncnn/convert_torch_unbind.cpp

|

||||

pass_ncnn/eliminate_output.cpp

|

||||

pass_ncnn/expand_expression.cpp

|

||||

pass_ncnn/insert_split.cpp

|

||||

pass_ncnn/chain_multi_output.cpp

|

||||

pass_ncnn/solve_batch_index.cpp

|

||||

|

||||

pass_ncnn/eliminate_noop.cpp

|

||||

pass_ncnn/eliminate_tail_reshape_permute.cpp

|

||||

pass_ncnn/fuse_convolution_activation.cpp

|

||||

pass_ncnn/fuse_convolution1d_activation.cpp

|

||||

pass_ncnn/fuse_convolutiondepthwise_activation.cpp

|

||||

pass_ncnn/fuse_convolutiondepthwise1d_activation.cpp

|

||||

pass_ncnn/fuse_deconvolution_activation.cpp

|

||||

pass_ncnn/fuse_deconvolutiondepthwise_activation.cpp

|

||||

pass_ncnn/fuse_innerproduct_activation.cpp

|

||||

pass_ncnn/fuse_transpose_matmul.cpp

|

||||

pass_ncnn/insert_reshape_pooling.cpp

|

||||

|

||||

pass_ncnn/F_adaptive_avg_pool1d.cpp

|

||||

pass_ncnn/F_adaptive_avg_pool2d.cpp

|

||||

pass_ncnn/F_adaptive_avg_pool3d.cpp

|

||||

pass_ncnn/F_adaptive_max_pool1d.cpp

|

||||

pass_ncnn/F_adaptive_max_pool2d.cpp

|

||||

pass_ncnn/F_adaptive_max_pool3d.cpp

|

||||

pass_ncnn/F_avg_pool1d.cpp

|

||||

pass_ncnn/F_avg_pool2d.cpp

|

||||

pass_ncnn/F_avg_pool3d.cpp

|

||||

pass_ncnn/F_batch_norm.cpp

|

||||

pass_ncnn/F_conv_transpose1d.cpp

|

||||

pass_ncnn/F_conv_transpose2d.cpp

|

||||

pass_ncnn/F_conv_transpose3d.cpp

|

||||

pass_ncnn/F_conv1d.cpp

|

||||

pass_ncnn/F_conv2d.cpp

|

||||

pass_ncnn/F_conv3d.cpp

|

||||

pass_ncnn/F_elu.cpp

|

||||

pass_ncnn/F_embedding.cpp

|

||||

pass_ncnn/F_gelu.cpp

|

||||

pass_ncnn/F_group_norm.cpp

|

||||

pass_ncnn/F_hardsigmoid.cpp

|

||||

pass_ncnn/F_hardswish.cpp

|

||||

pass_ncnn/F_hardtanh.cpp

|

||||

pass_ncnn/F_instance_norm.cpp

|

||||

pass_ncnn/F_interpolate.cpp

|

||||

pass_ncnn/F_layer_norm.cpp

|

||||

pass_ncnn/F_leaky_relu.cpp

|

||||

pass_ncnn/F_linear.cpp

|

||||

pass_ncnn/F_local_response_norm.cpp

|

||||

pass_ncnn/F_max_pool1d.cpp

|

||||

pass_ncnn/F_max_pool2d.cpp

|

||||

pass_ncnn/F_max_pool3d.cpp

|

||||

pass_ncnn/F_mish.cpp

|

||||

pass_ncnn/F_normalize.cpp

|

||||

pass_ncnn/F_pad.cpp

|

||||

pass_ncnn/F_pixel_shuffle.cpp

|

||||

pass_ncnn/F_pixel_unshuffle.cpp

|

||||

pass_ncnn/F_prelu.cpp

|

||||

pass_ncnn/F_relu.cpp

|

||||

pass_ncnn/F_relu6.cpp

|

||||

pass_ncnn/F_selu.cpp

|

||||

pass_ncnn/F_sigmoid.cpp

|

||||

pass_ncnn/F_silu.cpp

|

||||

pass_ncnn/F_softmax.cpp

|

||||

pass_ncnn/F_tanh.cpp

|

||||

pass_ncnn/F_upsample_bilinear.cpp

|

||||

pass_ncnn/F_upsample_nearest.cpp

|

||||

pass_ncnn/F_upsample.cpp

|

||||

pass_ncnn/nn_AdaptiveAvgPool1d.cpp

|

||||

pass_ncnn/nn_AdaptiveAvgPool2d.cpp

|

||||

pass_ncnn/nn_AdaptiveAvgPool3d.cpp

|

||||

pass_ncnn/nn_AdaptiveMaxPool1d.cpp

|

||||

pass_ncnn/nn_AdaptiveMaxPool2d.cpp

|

||||

pass_ncnn/nn_AdaptiveMaxPool3d.cpp

|

||||

pass_ncnn/nn_AvgPool1d.cpp

|

||||

pass_ncnn/nn_AvgPool2d.cpp

|

||||

pass_ncnn/nn_AvgPool3d.cpp

|

||||

pass_ncnn/nn_BatchNorm1d.cpp

|

||||

pass_ncnn/nn_BatchNorm2d.cpp

|

||||

pass_ncnn/nn_BatchNorm3d.cpp

|

||||

pass_ncnn/nn_ChannelShuffle.cpp

|

||||

pass_ncnn/nn_ConstantPad1d.cpp

|

||||

pass_ncnn/nn_ConstantPad2d.cpp

|

||||

pass_ncnn/nn_ConstantPad3d.cpp

|

||||

pass_ncnn/nn_Conv1d.cpp

|

||||

pass_ncnn/nn_Conv2d.cpp

|

||||

pass_ncnn/nn_Conv3d.cpp

|

||||

pass_ncnn/nn_ConvTranspose1d.cpp

|

||||

pass_ncnn/nn_ConvTranspose2d.cpp

|

||||

pass_ncnn/nn_ConvTranspose3d.cpp

|

||||

pass_ncnn/nn_ELU.cpp

|

||||

pass_ncnn/nn_Embedding.cpp

|

||||

pass_ncnn/nn_GELU.cpp

|

||||

pass_ncnn/nn_GroupNorm.cpp

|

||||

pass_ncnn/nn_GRU.cpp

|

||||

pass_ncnn/nn_Hardsigmoid.cpp

|

||||

pass_ncnn/nn_Hardswish.cpp

|

||||

pass_ncnn/nn_Hardtanh.cpp

|

||||

pass_ncnn/nn_InstanceNorm2d.cpp

|

||||

pass_ncnn/nn_LayerNorm.cpp

|

||||

pass_ncnn/nn_LeakyReLU.cpp

|

||||

pass_ncnn/nn_Linear.cpp

|

||||

pass_ncnn/nn_LocalResponseNorm.cpp

|

||||

pass_ncnn/nn_LSTM.cpp

|

||||

pass_ncnn/nn_MaxPool1d.cpp

|

||||

pass_ncnn/nn_MaxPool2d.cpp

|

||||

pass_ncnn/nn_MaxPool3d.cpp

|

||||

pass_ncnn/nn_Mish.cpp

|

||||

pass_ncnn/nn_MultiheadAttention.cpp

|

||||

pass_ncnn/nn_PixelShuffle.cpp

|

||||

pass_ncnn/nn_PixelUnshuffle.cpp

|

||||

pass_ncnn/nn_PReLU.cpp

|

||||

pass_ncnn/nn_ReflectionPad1d.cpp

|

||||

pass_ncnn/nn_ReflectionPad2d.cpp

|

||||

pass_ncnn/nn_ReLU.cpp

|

||||

pass_ncnn/nn_ReLU6.cpp

|

||||

pass_ncnn/nn_ReplicationPad1d.cpp

|

||||

pass_ncnn/nn_ReplicationPad2d.cpp

|

||||

pass_ncnn/nn_RNN.cpp

|

||||

pass_ncnn/nn_SELU.cpp

|

||||

pass_ncnn/nn_Sigmoid.cpp

|

||||

pass_ncnn/nn_SiLU.cpp

|

||||

pass_ncnn/nn_Softmax.cpp

|

||||

pass_ncnn/nn_Tanh.cpp

|

||||

pass_ncnn/nn_Upsample.cpp

|

||||

pass_ncnn/nn_UpsamplingBilinear2d.cpp

|

||||

pass_ncnn/nn_UpsamplingNearest2d.cpp

|

||||

pass_ncnn/nn_ZeroPad2d.cpp

|

||||

pass_ncnn/Tensor_contiguous.cpp

|

||||

pass_ncnn/Tensor_reshape.cpp

|

||||

pass_ncnn/Tensor_repeat.cpp

|

||||

pass_ncnn/Tensor_slice.cpp

|

||||

pass_ncnn/Tensor_view.cpp

|

||||

pass_ncnn/torch_addmm.cpp

|

||||

pass_ncnn/torch_amax.cpp

|

||||

pass_ncnn/torch_amin.cpp

|

||||

pass_ncnn/torch_clamp.cpp

|

||||

pass_ncnn/torch_clone.cpp

|

||||

pass_ncnn/torch_flatten.cpp

|

||||

pass_ncnn/torch_logsumexp.cpp

|

||||

pass_ncnn/torch_matmul.cpp

|

||||

pass_ncnn/torch_mean.cpp

|

||||

pass_ncnn/torch_permute.cpp

|

||||

pass_ncnn/torch_prod.cpp

|

||||

pass_ncnn/torch_squeeze.cpp

|

||||

pass_ncnn/torch_sum.cpp

|

||||

pass_ncnn/torch_transpose.cpp

|

||||

pass_ncnn/torch_unsqueeze.cpp

|

||||

)

|

||||

|

||||

set(pnnx_SRCS

|

||||

main.cpp

|

||||

ir.cpp

|

||||

storezip.cpp

|

||||

utils.cpp

|

||||

|

||||

pass_level0.cpp

|

||||

pass_level1.cpp

|

||||

pass_level2.cpp

|

||||

pass_level3.cpp

|

||||

pass_level4.cpp

|

||||

pass_level5.cpp

|

||||

|

||||

pass_ncnn.cpp

|

||||

|

||||

${pnnx_pass_level0_SRCS}

|

||||

${pnnx_pass_level1_SRCS}

|

||||

${pnnx_pass_level2_SRCS}

|

||||

${pnnx_pass_level3_SRCS}

|

||||

${pnnx_pass_level4_SRCS}

|

||||

${pnnx_pass_level5_SRCS}

|

||||

|

||||

${pnnx_pass_ncnn_SRCS}

|

||||

)

|

||||

|

||||

if(NOT MSVC)

|

||||

add_definitions(-Wall -Wextra)

|

||||

endif()

|

||||

|

||||

add_executable(pnnx ${pnnx_SRCS})

|

||||

|

||||

if(PNNX_COVERAGE)

|

||||

target_compile_options(pnnx PUBLIC -coverage -fprofile-arcs -ftest-coverage)

|

||||

target_link_libraries(pnnx PUBLIC -coverage -lgcov)

|

||||

endif()

|

||||

|

||||

if(WIN32)

|

||||

target_compile_definitions(pnnx PUBLIC NOMINMAX)

|

||||

endif()

|

||||

|

||||

if(TorchVision_FOUND)

|

||||

target_link_libraries(pnnx PRIVATE TorchVision::TorchVision)

|

||||

endif()

|

||||

|

||||

if(WIN32)

|

||||

target_link_libraries(pnnx PRIVATE ${TORCH_LIBRARIES})

|

||||

else()

|

||||

target_link_libraries(pnnx PRIVATE ${TORCH_LIBRARIES} pthread dl)

|

||||

endif()

|

||||

|

||||

#set_target_properties(pnnx PROPERTIES COMPILE_FLAGS -fsanitize=address)

|

||||

#set_target_properties(pnnx PROPERTIES LINK_FLAGS -fsanitize=address)

|

||||

|

||||

if(APPLE)

|

||||

set_target_properties(pnnx PROPERTIES INSTALL_RPATH "@executable_path/")

|

||||

else()

|

||||

set_target_properties(pnnx PROPERTIES INSTALL_RPATH "$ORIGIN/")

|

||||

endif()

|

||||

set_target_properties(pnnx PROPERTIES MACOSX_RPATH TRUE)

|

||||

|

||||

install(TARGETS pnnx RUNTIME DESTINATION bin)

|

||||

|

||||

if (WIN32)

|

||||

file(GLOB TORCH_DLL "${TORCH_INSTALL_PREFIX}/lib/*.dll")

|

||||

install(FILES ${TORCH_DLL} DESTINATION bin)

|

||||

endif()

|

||||

2597

3rdparty/ncnn/tools/pnnx/src/ir.cpp

vendored

Normal file

2597

3rdparty/ncnn/tools/pnnx/src/ir.cpp

vendored

Normal file

File diff suppressed because it is too large

Load Diff

242

3rdparty/ncnn/tools/pnnx/src/ir.h

vendored

Normal file

242

3rdparty/ncnn/tools/pnnx/src/ir.h

vendored

Normal file

@@ -0,0 +1,242 @@

|

||||

// Tencent is pleased to support the open source community by making ncnn available.

|

||||

//

|

||||

// Copyright (C) 2021 THL A29 Limited, a Tencent company. All rights reserved.

|

||||

//

|

||||

// Licensed under the BSD 3-Clause License (the "License"); you may not use this file except

|

||||

// in compliance with the License. You may obtain a copy of the License at

|

||||

//

|

||||

// https://opensource.org/licenses/BSD-3-Clause

|

||||

//

|

||||

// Unless required by applicable law or agreed to in writing, software distributed

|

||||

// under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR

|

||||

// CONDITIONS OF ANY KIND, either express or implied. See the License for the

|

||||

// specific language governing permissions and limitations under the License.

|

||||

|

||||

#ifndef PNNX_IR_H

|

||||

#define PNNX_IR_H

|

||||

|

||||

#include <initializer_list>

|

||||

#include <map>

|

||||

#include <string>

|

||||

#include <vector>

|

||||

|

||||

namespace torch {

|

||||

namespace jit {

|

||||

struct Value;

|

||||

struct Node;

|

||||

} // namespace jit

|

||||

} // namespace torch

|

||||

namespace at {

|

||||

class Tensor;

|

||||

}

|

||||

|

||||

namespace pnnx {

|

||||

|

||||

class Parameter

|

||||

{

|

||||

public:

|

||||

Parameter()

|

||||

: type(0)

|

||||

{

|

||||

}

|

||||

Parameter(bool _b)

|

||||

: type(1), b(_b)

|

||||

{

|

||||

}

|

||||

Parameter(int _i)

|

||||

: type(2), i(_i)

|

||||

{

|

||||

}

|

||||

Parameter(long _l)

|

||||

: type(2), i(_l)

|

||||

{

|

||||

}

|

||||

Parameter(long long _l)

|

||||

: type(2), i(_l)

|

||||

{

|

||||

}

|

||||

Parameter(float _f)

|

||||

: type(3), f(_f)

|

||||

{

|

||||

}

|

||||

Parameter(double _d)

|

||||

: type(3), f(_d)

|

||||

{

|

||||

}

|

||||

Parameter(const char* _s)

|

||||

: type(4), s(_s)

|

||||

{

|

||||

}

|

||||

Parameter(const std::string& _s)

|

||||

: type(4), s(_s)

|

||||

{

|

||||

}

|

||||

Parameter(const std::initializer_list<int>& _ai)

|

||||

: type(5), ai(_ai)

|

||||

{

|

||||

}

|

||||

Parameter(const std::initializer_list<int64_t>& _ai)

|

||||

: type(5)

|

||||

{

|

||||

for (const auto& x : _ai)

|

||||

ai.push_back((int)x);

|

||||

}

|

||||

Parameter(const std::vector<int>& _ai)

|

||||

: type(5), ai(_ai)

|

||||

{

|

||||

}

|

||||

Parameter(const std::initializer_list<float>& _af)

|

||||

: type(6), af(_af)

|

||||

{

|

||||

}

|

||||

Parameter(const std::initializer_list<double>& _af)

|

||||

: type(6)

|

||||

{

|

||||

for (const auto& x : _af)

|

||||

af.push_back((float)x);

|

||||

}

|

||||